The purpose of caching is to make frequently accessed or hard-to-generate data easily accessible by users. In designing a data-intensive application, caching is employed to reduce the latency and overhead cost of retrieving data from its source ensuring quick access and availability to its end users.

While the definition and purpose of caching are clear, we tend to have the problem of determining what data needs to be cached and how we should go about caching this data. Every application has unique requirements so there's no one size fits all solution to these problems.

In this article, we are going to explore some of the approaches and eviction policies used in caching.

Caching Approaches

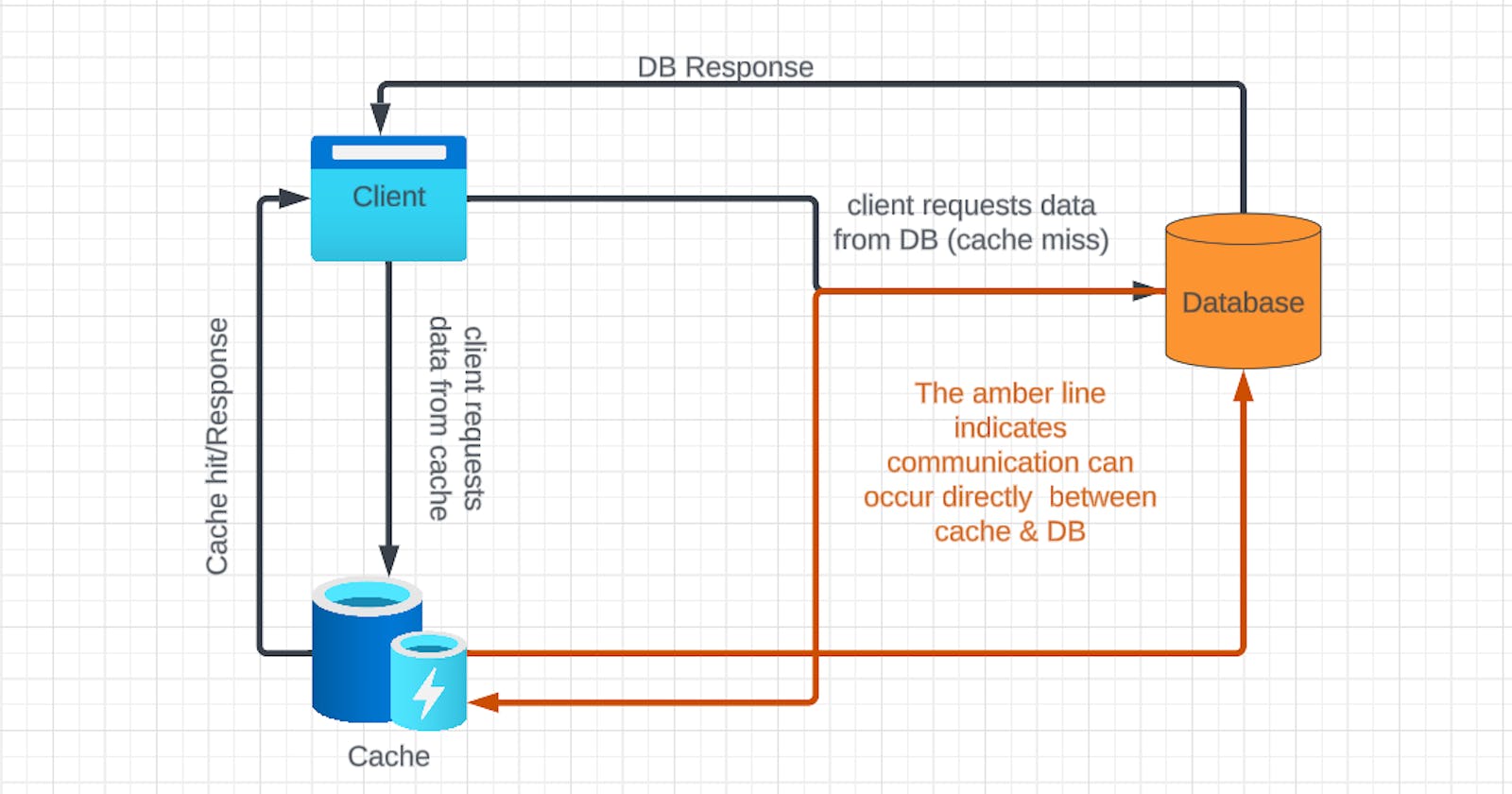

Before exploring the different approaches to caching, I will like to make it clear that "Caching Approaches" is distinctively different from "Caching Strategies". Caching Approaches dictate the criteria or logic at which data should be cached while caching strategies describe how caching is implemented, managed, and integrated into the system architecture.

To learn further on what caching strategy is and its different types click here. Now let's discuss the different approaches to caching.

Content-Based Caching: This approach involves caching data based on its content and characteristics. The data to cache is determined based on the nature or properties of the data. Examples of such data include expensive-to-generate data (expensive queries), large static or media files, and data sharing across multiple users.

To implement this approach we can consider using the Write-Through and Cache-Aside caching strategy.

Usage/Popularity-Based Caching: This approach involves caching data that is frequently accessed or popular among users. Data is cached based on metrics such as the number of accesses, user interactions, or other usage patterns. For example, frequently visited web pages, frequently requested API endpoints, or frequently accessed database records. How you track these metrics should be specific to your use case.

We can also implement this approach using the Write-Through and Cache-Aside caching strategy.

Time-Based Caching: This approach involves caching data for a specific period regardless of how often they're accessed or how expensive to generate the data is. This approach ensures that frequently accessed data are always cached without the need for explicit visit tracking. To implement this approach we can consider using the Write-Through, Cache-Aside, Read-Through, and Write-Back caching strategy.

Lazy Loading: This approach involves caching data only upon request. It avoids caching data that are rarely accessed, saving resources and storage space, unlike Time-Based Caching. To implement this approach we can consider using the Write-Through, Cache-Aside, Read-Through, and Write-Back caching strategy.

Those are the 4 most common approaches used in caching data. The approach you choose to use should be directly related to your caching and application's objective.

Eviction Policies

We've learnt the different approaches to caching and we are now well informed on what strategies and approaches to use and what data to store. With this understanding, how do we create space or empty our cache storage when it's full?

This is why we have eviction policies. Policies are put in place to remove items or entries from our cache storage when we hit the storage limit. Below are some of the eviction policies commonly used.

Least Recently Used (LRU): Assuming our cache storage is represented as a JSON array with the capacity to only contain 3 items and we wish to cache another item.

[ {"item_name":"1 item", "last_accessed_at":"10.01.2014 11:34"}, {"item_name":"2 item", "last_accessed_at":"09.01.2014 10:14"}, {"item_name":"3 item", "last_accessed_at":"12.01.2014 14:54"}, ]To create space for the new entry, using a timestamp, the Least Recently Used (LRU) policy will remove the item whose

last_accessed_atis the lowest as it's the item that is assumed to be rarely accessed.Least Frequently Used (LFU): While the LFU sounds similar to LRU, their implementation is different. The LFU is a popularity-based policy that uses a counter to determine the items which are least accessed in the cache storage. Using our JSON array as an example, we will add a counter to the object and omit the

last_accessed_at.[ {"item_name":"1 item", "access_counter":6}, {"item_name":"2 item", "access_counter":5}, {"item_name":"3 item", "access_counter":10}, ]This counter is incremented every time an item is accessed. To create space for a new entry, the Least Frequently Used (LFU) policy will remove the item whose

access_counteris the lowest.Most Recently Used (MRU): This policy opposite to LRU removes the most recently accessed items in the cache storage. The idea behind this eviction policy is to cater to special cases where recently accessed items have the lowest chances of being accessed again in the future. An example of such special cases would be a library of books.

If a user completes reading a book, unless they want to reference a page or section in that book, they're less likely to repeat the same book. To create space for a new book in the bookshelf (cache), you remove the book which your user just recently completed.

Now you need not worry about implementing these eviction policies yourself as most in-memory data store tools like Redis handle them perfectly for you and the above are the most used/supported policies in most tools. To learn more about other policies, please see the links attached in the references section.

Conclusion

In conclusion, caching is an important aspect of designing a data-intensive application and choosing the right caching approach, strategy, and eviction policies will significantly affect how well your application will scale. Learn further by visiting the links attached in the references section.